Web Application Monitoring Best Practices

It’s quite embarrassing to receive bug reports from your loyal users. Not everyone will take the time to contact support and report an issue. Most will suffer in silence or even churn.

A robust monitoring system is the only reliable way to know about production issues and fix them before they become a real problem. You have to collect all critical logs and metrics to be able to debug what happened. Alerts should notify you when something goes wrong, and they must be more reliable than a Swiss watch. Missing any of them will hurt, so you also need a well-tested reaction process.

Let’s dive a bit deeper into monitoring best practices.

Don’t Reinvent the Wheel

Creating a monitoring solution might seem easy at first glance. Just send an email when something goes wrong, and that’s it.

As an application grows, monitoring becomes much more complicated. You need to aggregate repeating errors into one alert, send a daily digest, collect issues from multiple microservices, monitor the frontend, visualize data, and so on.

Today, people build software on the shoulders of giants. Using various open-source packages, reliable databases, version control systems, and web servers is the norm. Monitoring solutions aren’t that different. Many proven open-source and proprietary solutions do everything you might ever need in your application out-of-the-box.

Aim for End-to-End Monitoring

Some people think that server monitoring is much more critical than frontend monitoring. That might be the case for some mission-critical software, but the users see the frontend of an application, not the backend.

It’s good to know that the backend works fine, but knowing that users have a smooth experience is also essential. In modern applications, the frontend is also very complex, and complexity is the number one reason for bugs. Most frontend developers trust the backend too much, so even slightly invalid data may cause huge problems.

Monoliths are rare nowadays, and with distributed microservice or serverless architecture, it’s not that straightforward to track what caused the issue in the first place.

Advanced monitoring tools, like Aspecto, will auto-magically show you the whole request flow along with all the details that happened along the way, like requests to other microservices, SNS messages, and database calls. If you’re using a different monitoring tool, at least log the same request-id across all the services. This approach will allow you to find everything related to the request manually. Ideally, this ID should come from the frontend.
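The request-id approach above can be sketched with Python's standard library alone. This is a minimal illustration, not a specific tool's API: the `X-Request-ID` header name, the `start_request` and `outgoing_headers` helpers, and the `orders` logger are all assumptions made for the example.

```python
import contextvars
import logging
import uuid

# Assumed header name; any name works as long as every service agrees on it.
REQUEST_ID_HEADER = "X-Request-ID"

# Holds the ID of the request currently being handled.
request_id_var = contextvars.ContextVar("request_id", default="-")


class RequestIdFilter(logging.Filter):
    """Copy the current request ID onto every log record."""

    def filter(self, record):
        record.request_id = request_id_var.get()
        return True


def start_request(incoming_headers):
    """Reuse the caller's request ID, or create one if it is missing.

    `incoming_headers` is a plain dict here, so the lookup is case-sensitive.
    """
    request_id = incoming_headers.get(REQUEST_ID_HEADER) or uuid.uuid4().hex
    request_id_var.set(request_id)
    return request_id


def outgoing_headers():
    """Headers to attach to calls made to other services."""
    return {REQUEST_ID_HEADER: request_id_var.get()}


logger = logging.getLogger("orders")  # hypothetical service name
handler = logging.StreamHandler()
handler.setFormatter(
    logging.Formatter("%(levelname)s [%(request_id)s] %(message)s")
)
handler.addFilter(RequestIdFilter())
logger.addHandler(handler)
logger.setLevel(logging.INFO)

start_request({"X-Request-ID": "abc123"})  # ID supplied by the frontend
logger.info("saving item")                 # logs: INFO [abc123] saving item
```

Every service would call something like `start_request` when a request arrives and pass `outgoing_headers()` along on downstream calls, so searching the logs for one ID returns the whole flow.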

Collect Meaningful Logs

In our opinion, writing useful logs requires a lot of experience.

Everyone knows that you must keep your logs relatively clean to find useful insights there quickly. Besides using different log levels, try to think about how you would debug that application using these records.

A million “got a request” lines won’t help at all. What request? What details? Even for info-level logs, add at least some context to link them with the rest. Warning and error logs mean something isn’t working as expected. These should capture as much context as possible, including arguments, the steps that succeeded, and all the details about the potential failure.

We suggest using structured logging everywhere. It takes a tiny bit of upfront setup effort that pays off in the long run. Unlike text logs, structured records are a breeze to search, which saves countless hours of digging through the logs.
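As a rough sketch of what structured logging can look like, the example below emits one JSON object per log line using only Python's standard `logging` and `json` modules. The `JsonFormatter` class, the `context` key, and the field names are assumptions for illustration; dedicated structured-logging libraries offer the same idea with more features.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record):
        entry = {
            "time": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Fields passed via extra={"context": {...}} end up on the record.
        entry.update(getattr(record, "context", {}))
        if record.exc_info:
            entry["exception"] = self.formatException(record.exc_info)
        return json.dumps(entry, default=str)


logger = logging.getLogger("shop")  # hypothetical logger name
handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
logger.addHandler(handler)

# The context makes this line searchable by item, user, or request.
logger.warning(
    "save failed",
    extra={"context": {"item_id": 42, "user_id": 7, "request_id": "abc123"}},
)
```

Because every record is a JSON object, a query such as "all warnings for `item_id` 42" becomes a field filter in the monitoring system instead of a regular expression over free text.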

Of course, every piece of the application should send logs to a single monitoring system. Having them scattered all over the place would make further investigations a nightmare. Don’t forget that you can’t access the user’s browser console.

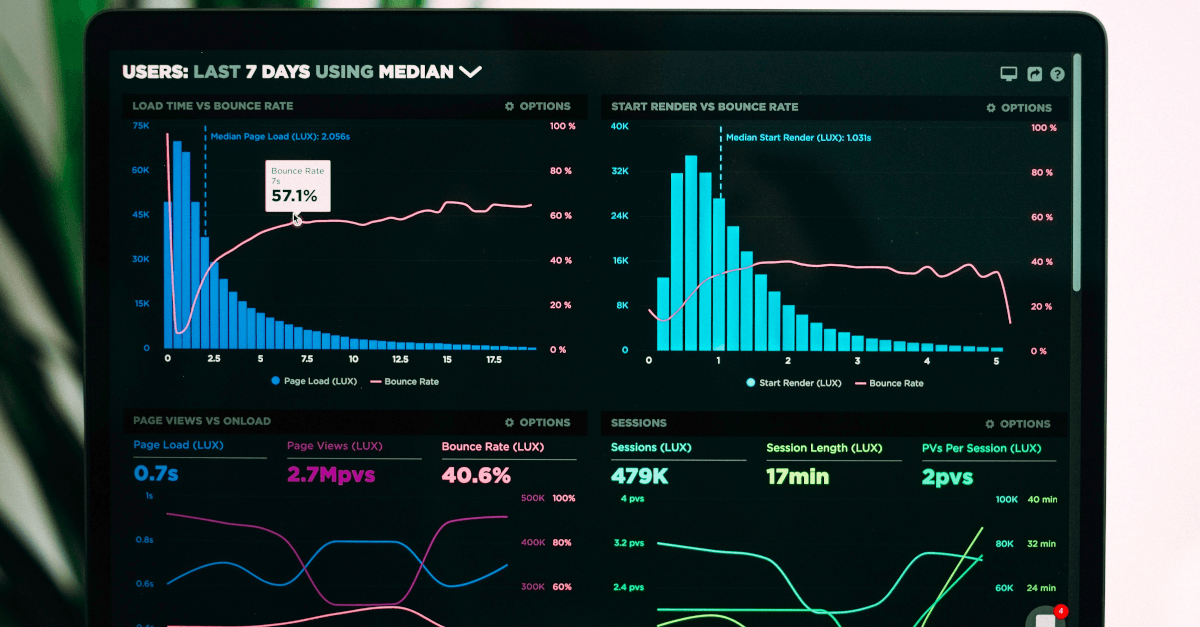

Set Up Monitoring Dashboards

Now that you have useful logs in place, it’s time to make a dashboard. You need a concise visual representation of your application’s health, performance, scalability, and other vital metrics.

Think of the dashboard in your car. It displays current information that you check from time to time, like speed, fuel level, and engine RPM. There are also indicator lights that signal an issue, like low fuel, a battery that isn’t charging, or an engine problem. You don’t have to be a professional mechanic to know that something’s wrong with the car when one of them lights up.

A dashboard is much more convenient than an infinite list of metric updates mixed with alerts. With a single glance at a well-designed dashboard, you will know where the problem is, what parts of the system are working as expected, and what needs further investigation.

Car mechanics use diagnostic tools for troubleshooting with a more detailed view of the car systems. You can create similar dashboards for critical microservices in the application and use them to find the problem even faster.

Configure Actionable Alerts

Don’t rely on people to log in to the monitoring system and check the dashboards all the time. It’s inefficient, tedious, and error-prone. A machine will do it much more reliably. People should be informed about problems automatically as they occur.

You can configure alerts in every modern monitoring system. That’s already a vast improvement compared to manually checking the dashboards from time to time. Emails should be informative enough for people receiving them to take the right actions.

In large projects, different people or even teams work on separate parts of the application. Ensure that the alerts go to the correct recipients, as spamming them to everyone will induce people to ignore them all the time.

The alert should be well-structured and contain all the helpful information about the problem, including:

- The severity of the issue

- Implications from the business logic side - what didn’t happen and who is affected

- What action caused the alert in the first place

- Links to see the details, logs, and ideally the whole flow that led to unexpected behavior

- Instructions for the person receiving it on what to do next

This information will help to identify and resolve the problem quickly and reliably.
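One way to keep alerts consistent is to make the fields listed above mandatory in code. The sketch below is only an illustration: the `Alert` class, its field names, and the example.com links are hypothetical and not part of any particular monitoring tool.

```python
from dataclasses import dataclass, field


@dataclass
class Alert:
    """Hypothetical alert payload mirroring the checklist above."""

    severity: str          # how bad it is, e.g. "critical" or "warning"
    business_impact: str   # what didn't happen and who is affected
    trigger: str           # what action caused the alert
    next_steps: str        # instructions for the person receiving it
    links: list = field(default_factory=list)  # details, logs, request flow

    def render(self) -> str:
        """Format the alert as a short plain-text message."""
        lines = [
            f"[{self.severity.upper()}] {self.trigger}",
            f"Impact: {self.business_impact}",
        ]
        lines += [f"Details: {url}" for url in self.links]
        lines.append(f"Next steps: {self.next_steps}")
        return "\n".join(lines)


alert = Alert(
    severity="critical",
    business_impact="Orders are not saved; all checkout users are affected",
    trigger="POST /orders returned 500 for request abc123",
    next_steps="Check the orders database connection, then page the on-call",
    links=["https://monitoring.example.com/requests/abc123"],
)
print(alert.render())
```

Since the first four fields have no defaults, an alert cannot be created without stating its severity, impact, trigger, and next steps.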

When it comes to alerts, less is more. They should be rare, an exception, not the norm. People are more likely to treat a notification seriously if it happens, for example, once a week and not multiple times a day. You want a high signal-to-noise ratio, so use all the capabilities of your monitoring system to receive only useful information.

Repeated alerts about an issue that has already been reported are one example of noise you can mute. Just don’t forget to re-enable them once the issue has been fixed, so that you find out if the fix didn’t actually work.

Another example would be multiple alerts about the same issue coming from different systems in your application. When something goes wrong, it usually starts a chain reaction that involves the frontend and multiple microservices. Advanced tools understand this and can filter out such alerts too.
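Most monitoring systems have this kind of suppression built in, but the idea is simple enough to sketch. In the hypothetical `AlertDeduplicator` below, alerts that share a fingerprint (for example, the error type plus the request ID) are sent once per quiet period, and `resolve` re-arms the alert after a fix. The class name, the fingerprint format, and the one-hour default are assumptions.

```python
import time


class AlertDeduplicator:
    """Suppress repeats of an alert that was already sent recently."""

    def __init__(self, quiet_period_seconds=3600, clock=time.monotonic):
        self.quiet_period = quiet_period_seconds
        self.clock = clock        # injectable so the logic is easy to test
        self._last_sent = {}      # fingerprint -> time of the last sent alert

    def should_send(self, fingerprint):
        """Return True if an alert with this fingerprint should go out now."""
        now = self.clock()
        last = self._last_sent.get(fingerprint)
        if last is not None and now - last < self.quiet_period:
            return False  # same issue, already reported: stay quiet
        self._last_sent[fingerprint] = now
        return True

    def resolve(self, fingerprint):
        """Forget a fixed issue so that a recurrence alerts immediately."""
        self._last_sent.pop(fingerprint, None)


dedup = AlertDeduplicator(quiet_period_seconds=3600)
# The frontend and two microservices report the same failed request.
for source in ("frontend", "orders-service", "payments-service"):
    if dedup.should_send("save-failed:abc123"):
        print(f"alert sent (first reported by {source})")
```

Calling `resolve` once the fix is deployed covers the re-enabling step: if the same problem shows up again, the team hears about it right away instead of after the quiet period.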

No News is NOT Good News

You’ve successfully set up the monitoring of your application and haven’t received any issues in weeks. Good scenario, right?

It’s a trap! While not having issues in the application is excellent, receiving no alerts is not always that good. It’s unlikely that a complex application has no bugs. Large tech corporations even have separate teams and procedures dedicated to avoiding and fixing them in production. Usually, new features are rolled out to a subset of users and continuously monitored for failures. Even after full deployment, the DevOps team might still decide to roll back parts of the application if they receive critical alerts.

Systems may fail, and the monitoring system is no exception. If you were receiving alerts once every two weeks on average, you shouldn’t celebrate two months without them. You should be worried. There might be at least one problem, and that problem is your monitoring system or its configuration.

Knowing that no news is not good news is the first step toward a solution. First of all, you can configure the monitoring system to send a daily digest. It can be a short overview of what’s going on in the application. Not receiving it is a big red flag. You shouldn’t have to log in to the system to hunt for problems, but it’s good practice to visit the dashboard regularly and ensure that it displays valid data and that all the metrics are up to date.
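The missing-digest check can itself be automated, ideally from a small job that runs outside the monitoring system. The sketch below assumes you can obtain the timestamp of the last received digest; the `is_monitoring_silent` function and the 26-hour threshold (a daily digest plus some slack) are illustrative assumptions.

```python
from datetime import datetime, timedelta, timezone


def is_monitoring_silent(last_digest_at, now=None,
                         max_silence=timedelta(hours=26)):
    """Return True if the daily digest is overdue.

    `last_digest_at` must be a timezone-aware datetime. The default
    threshold allows a daily digest to arrive up to two hours late.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    return now - last_digest_at > max_silence


# Example: the last digest arrived three days ago.
last_digest = datetime.now(timezone.utc) - timedelta(days=3)
if is_monitoring_silent(last_digest):
    print("No digest for over a day: check the monitoring system itself")
```

The check is only useful if it reports through a channel that does not depend on the monitoring system it is watching.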

Use Informative Metrics

Unexpected conditions within your application would trigger the most critical alerts. However, some signals would be based on metrics.

For example, you would like to know that your backend endpoint has consistent performance and users have a good experience when saving items within the application. The first metric that comes to mind is the average response time of the save endpoint. You probably want this to be, let’s say, lower than 200ms every minute.

This alert is pretty straightforward to set up in most monitoring systems, so you’ve done it. The alert never triggered, so everything must be OK. But is it?

What if, during some random minute, the endpoint processed 100 requests? Ninety of them took 50 milliseconds, but at some point, the queue built up, five calls took 500 ms, and the last five took somewhere between one and two seconds. The average latency would still be in the 120 to 170 ms range, but 10% of requests were out of range, and 5% of users had a really poor experience.

Percentile-based metrics would be a much better choice for this alert. Keep in mind that the latencies of the slowest 1% and 5% of requests will often be much higher than the average. We would suggest alerting when the slowest 5% of requests take longer than 200 ms (that is, when the 95th percentile latency exceeds 200 ms). Creating another trigger, such as the slowest 1% of requests taking longer than 1 second, would cover the edge cases when the backend gets pretty overloaded while still avoiding too many alerts.
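The numbers from the example minute can be checked with a few lines of Python. This sketch reads the "5% latency" and "1% latency" rules as the 95th and 99th percentiles, uses a simple nearest-rank percentile (monitoring systems may interpolate differently), and assumes the five slowest calls took 1500 ms each, since the text only says between one and two seconds.

```python
import math
from statistics import mean


def percentile(values, pct):
    """Nearest-rank percentile: the smallest value such that at least
    pct percent of the samples are less than or equal to it."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


# One minute of traffic on the save endpoint, in milliseconds.
# The 1500 ms value is an assumed midpoint of "one to two seconds".
latencies_ms = [50] * 90 + [500] * 5 + [1500] * 5

average = mean(latencies_ms)       # 145 ms
p95 = percentile(latencies_ms, 95)  # 500 ms
p99 = percentile(latencies_ms, 99)  # 1500 ms

print(f"average rule fires: {average > 200}")  # False, the problem is hidden
print(f"p95 rule fires:     {p95 > 200}")      # True
print(f"p99 rule fires:     {p99 > 1000}")     # True
```

The average stays comfortably under 200 ms, so the average-based alert never fires, while both percentile rules catch the queue build-up.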

Have Reaction Processes In-Place

Now that you have dashboards to monitor the situation, structured logs to quickly find the causes of problems, and informative alerts that notify the team when something goes wrong, it’s time to set up reliable reaction processes to fix issues as soon as possible.

We’ve already mentioned that alerts should go to the correct recipients and not spam irrelevant people. Someone in each team should be accountable for handling the issues. Usually, it’s the team lead, because they have the best high-level understanding of the application, can evaluate the severity, and know who in the team can quickly tell what’s wrong and fix it.

People are less reliable than machines, so ensure that everyone in the team understands the priorities and can switch to critical tasks as soon as they arrive. Most alerts won’t be that urgent and can end up in a backlog, among other tasks. But if something is completely broken, you should switch all your resources to fix it.

Ideally, this shouldn’t happen at all. If it does, and does so frequently, there’s probably something wrong with the delivery process. Developers should take ownership of the features they implement and perform more testing, and you probably need a QA engineer.

Test the Monitoring Setup

Just like the application itself, monitoring also needs testing. It’s not enough to test if the dashboard works, alerts are coming, and logs are there. You have to try the whole process in close to a real-world scenario.

We don’t suggest introducing bugs into the system deliberately, but secretly messing up some data for a test user and letting the team find and fix it would be an excellent exercise for them. You’ll test the alerts, check that the dashboards also show the issue, ensure that the logs are useful enough to find out what’s happening, and verify that the team doesn’t just blame the code that’s failing but is capable of finding the root cause.

There are, of course, other ways to test the whole process. Messing with the data won’t be ideal in all scenarios as the team might have access to sensitive data.

The point is that you have to test the whole process at once, from alert to deployed fix, not only random parts of it.

Conclusion

Reliable monitoring will considerably improve product quality, allowing your team to learn about and fix bugs before they become an issue for the users.

The setup might seem easy at first, and you might even be tempted to develop an in-house solution for it. Trust us, it will eventually grow, and even some of the best proprietary products that provide everything you could imagine out of the box would require some manual coding to cover all the cases.

Honestly, we don’t do the complete setup for every project. Sometimes, it makes no sense, or the client has budget constraints.

Structured logs are essential for every application, as it’s quite hard to find out what’s going on without them. Dashboards are critical for complex systems with lots of moving parts, like various databases, microservices, and queues. For consumer-facing applications, alerts are the only reliable way to learn about bugs, while with internal applications, the employees will tell you if something isn’t working as expected.

We wish you fewer bugs, as zero is just unrealistic. Feel free to contact us with any questions.