Load Time Optimization Techniques for React Apps

Making your application fast is crucial. Fast sites rank higher on Google, and probably on other search engines, but more importantly, they provide a much better user experience, especially on mobile.

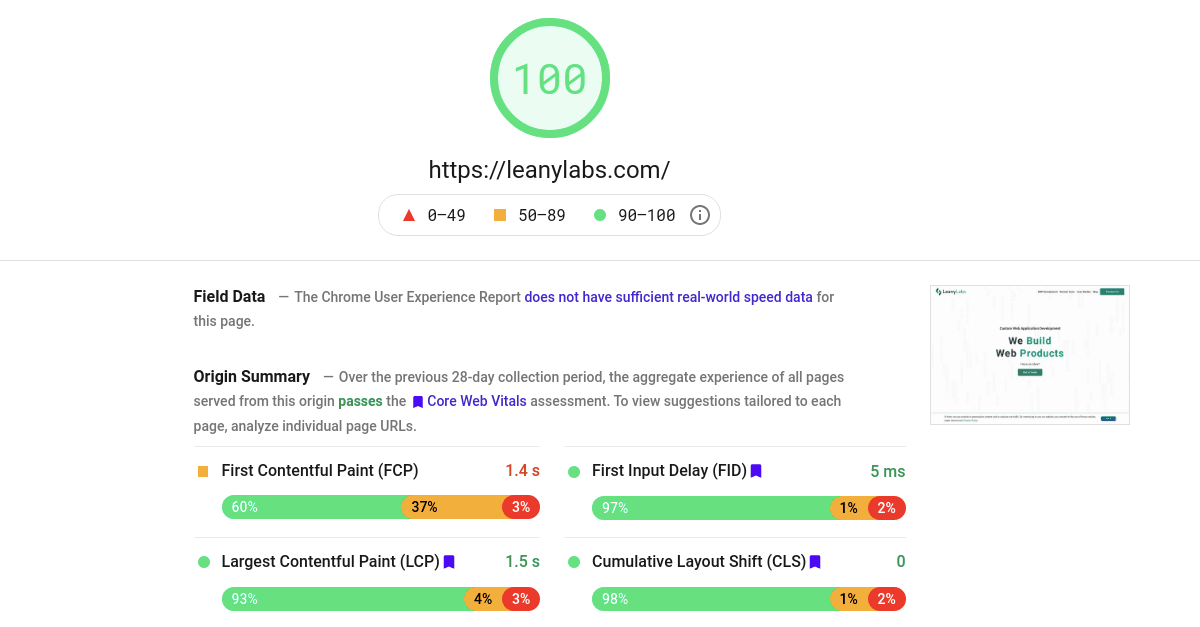

Google’s PageSpeed Insights lets you analyze your application, find performance bottlenecks, and later verify that your fixes work as expected. It primarily covers load times, so we’ll focus on them in this article too. If you’re interested in optimizing the runtime performance of React applications, “21 Performance Optimization Techniques for React Apps” has some excellent tips.

Load time consists of downloading resources, processing them, and rendering the DOM. That’s what you have to optimize in your project. Traditional websites are usually bottlenecked by the network bandwidth, while in rich web frontends, JavaScript processing time might become a problem, especially on slower mobile phones.

Table of Contents

- Set the Cache-Control Headers

- Serve Everything From a CDN

- Leverage Server-Side Rendering

- Optimize SVGs

- Use WebP

- Favor Picture Element Over Img

- Use Img for SVGs

- Lazy Load Images

- Optimize Other Assets

- Avoid CSS-in-JS

- Leverage Code-Splitting

- Optimize NPM Dependencies

- Self-Host Your Fonts

- Add Link Headers

- Load External Scripts Asynchronously

- Test Everything

- Conclusion

Set the Cache-Control Headers

The Cache-Control header instructs browsers and proxies how to cache your resources, avoiding repeated requests to your server. While it doesn’t affect the first load time, cached resources drastically reduce subsequent load times: they aren’t fetched from the network but read from a fast local cache instead.

CDNs also respect this header, meaning resources are stored at edge locations and served to users much more quickly.

Be careful, though: long expiration times prevent users from fetching an updated version of the resource until their cached copy expires!

When using webpack with a sensible configuration, it’s usually safe to set long expiration times for images, CSS, and even JavaScript files. Webpack adds content hashes to the file names (via [contenthash] in the output file name template), so an updated asset gets a new name. From the browser’s perspective, it’s an entirely different resource, and everything works fine.

However, keep a relatively short expiration for the entry index.html file. It will have the script tag pointing to the current version of your application, so it has to be updated for users to get a new version.
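A minimal sketch of that policy follows, for example as logic in a custom server or edge function. It assumes your build emits content-hashed names like main.3f2a9c1b.js; the regular expression and the one-hour default for unhashed files are our own assumptions, so adapt them to your output file name template.

```typescript
// Sketch: choose a Cache-Control value for a request path.
// Assumption: webpack emits names like "main.3f2a9c1b.js", i.e. a hex
// content hash of 8+ characters right before the extension.
const HASHED_ASSET = /\.[0-9a-f]{8,}\.(?:js|css|png|jpe?g|webp|svg|woff2?)$/i;

function cacheControlFor(path: string): string {
  if (path === "/" || path.endsWith(".html")) {
    // The entry document must be revalidated on every load so users
    // receive script tags pointing at the newest build.
    return "no-cache";
  }
  if (HASHED_ASSET.test(path)) {
    // A new build produces a new file name, so this URL's content never
    // changes and can be cached for a year without revalidation.
    return "public, max-age=31536000, immutable";
  }
  // Everything else (favicon, robots.txt, ...): a modest default we chose.
  return "public, max-age=3600";
}
```

Note that no-cache doesn’t disable caching: the browser keeps index.html but revalidates it with the server before each use, which is cheap when the file hasn’t changed. A short max-age works too if you can tolerate a brief delay before users see a new release.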

You can also implement custom update logic that polls the server for a newer version, shows a notification, and updates the application when the user confirms. For products that frequently stay open in the user’s browser, like Gmail, it’s a must. Without this mechanism, even with short cache times, users have to reload or reopen your application for it to update.
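Here’s a minimal sketch of such an updater, written against injected functions rather than a specific framework. fetchLatestVersion and onUpdateAvailable are hypothetical hooks you’d provide yourself, for example by fetching a version.json file your build writes and by showing a banner that reloads the page when the user confirms; neither is an existing library API.

```typescript
// Sketch of a client-side update checker. fetchLatestVersion and
// onUpdateAvailable are hypothetical hooks you provide (e.g. fetching a
// version.json written at build time and showing a "Reload" banner).
type VersionSource = () => Promise<string>;

function createUpdateChecker(
  currentVersion: string,
  fetchLatestVersion: VersionSource,
  onUpdateAvailable: (latest: string) => void,
) {
  let notified = false;

  // Resolves to true when the deployed version differs from ours.
  async function checkOnce(): Promise<boolean> {
    // A failed poll (e.g. offline) must never break the running app.
    const latest = await fetchLatestVersion().catch(() => null);
    if (latest === null) return false;
    const updated = latest !== currentVersion;
    if (updated && !notified) {
      notified = true; // prompt the user only once
      onUpdateAvailable(latest);
    }
    return updated;
  }

  // Polls periodically; the returned function stops polling.
  function start(intervalMs = 5 * 60 * 1000): () => void {
    const id = setInterval(() => void checkOnce(), intervalMs);
    return () => clearInterval(id);
  }

  return { checkOnce, start };
}
```

Keeping the network call and the UI outside the checker makes the polling logic easy to test, and swallowing fetch errors ensures a flaky connection never interrupts the user.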

Serve Everything From a CDN

It might sound like a no-brainer, but you should be using a Content Delivery Network (CDN). A CDN serves your assets from a location geographically closer to the user, which means higher bandwidth and lower latency. CDNs are optimized for speed and usually don’t cost that much.

CDNs can also proxy and cache your API calls if you need that, and they usually respect the Cache-Control headers, allowing different expiration times for every resource. You can even configure modern ones to ignore specific query parameters or to cache based on cookies. Some of them can enrich your requests with custom headers, like the original IP address or IP geolocation.

For static assets, we sometimes use Netlify CDN. It has a very generous free plan and a super-easy setup. Cloud providers have their own CDNs too, which let you stay within the same infrastructure and save on bandwidth. Of course, you can use a different CDN if it better suits your needs.

Leverage Server-Side Rendering

With typical client-side applications, the browser has to fetch, parse and execute all the JavaScript that generates the document that users would see. It takes some time, especially on slow devices.

Server-side rendering provides a much better user experience, because the browser fetches a complete document and displays it before loading, parsing, and executing your JavaScript code. The client doesn’t slowly build the DOM; it only hydrates the markup it already has. Yes, the application won’t be interactive straight away, but it still feels a lot snappier. After the initial load, it behaves just like a regular client-side app, so we’re not going back to the old days of server-side code that wastes server resources on rendering the client could do itself.

PageSpeed loves this, as it significantly decreases First Contentful Paint and Largest Contentful Paint, two of the main metrics behind the overall score. Also, some search engines, social networks, messengers, and other applications don’t run JavaScript when fetching pages. They won’t find Open Graph tags and structured data that aren’t in the initial document, which results in a poor experience when, for example, someone shares a link to your website.

Server-side rendering by itself is still relatively slow and consumes server resources, so you probably want to cache rendered pages longer than you would a simple index.html entry point. Some frameworks, like Gatsby, avoid this problem altogether by pre-rendering the whole website into static HTML pages. That works exceptionally well for relatively stable websites, including e-commerce, where most content changes infrequently. However, it’s not the best approach for highly dynamic websites and web applications that rely heavily on user input to render content.
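If you render on demand, a short-lived in-memory cache is one simple way to avoid re-rendering the same page for every request. This is a sketch: render stands in for your own SSR function (for example, one wrapping renderToString), the injectable clock exists only for testing, and a production version would also bound the cache size or leave caching to the CDN via Cache-Control.

```typescript
// Sketch: keep server-rendered HTML in memory for ttlMs milliseconds.
// render is a placeholder for your own SSR function, not a library API.
type Render = (url: string) => Promise<string>;

function createPageCache(render: Render, ttlMs: number, now: () => number = Date.now) {
  // Note: this Map grows without limit; bound it (e.g. LRU) in production.
  const entries = new Map<string, { html: string; expires: number }>();

  return async function getPage(url: string): Promise<string> {
    const hit = entries.get(url);
    if (hit && hit.expires > now()) return hit.html; // fresh: skip rendering
    const html = await render(url); // missing or stale: render again
    entries.set(url, { html, expires: now() + ttlMs });
    return html;
  };
}
```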

Adding server-side rendering to an existing project is not a trivial task. It requires a separate webpack config and a Node.js entry point. You have to fetch all the data before rendering, because rendering on the server is synchronous. React useEffect hooks and lifecycle methods such as componentDidMount don’t run on the server either. On top of that, memoization libraries like reselect may cause extensive memory leaks on the server if misused: you’ll have a separate Redux store for every request, so memoizing selectors is often not a good idea.

There are many frameworks built with server-side rendering in mind, for example, Next.js. They handle most of the complexity, letting you write the application instead of boilerplate code. Of course, every framework has its downsides. With Next.js, you’re forced to use its router and Link component, which are fairly constrained compared to the far more flexible react-router.

If you decide to go with an in-house solution, keep in mind that streaming rendering is superior to building one big string and writing it to the Node.js response. Not only does it save server memory, but it also lets the browser start parsing HTML as soon as possible, reducing rendering time even more. PageSpeed and, most likely, Google Search also reward low server response times, which can help your pages rank higher.

In React 17 and earlier, you’ll have to use renderToNodeStream instead of renderToString; React 18 deprecated renderToNodeStream in favor of renderToPipeableStream. Keep in mind that some libraries, like react-helmet, won’t work with this approach.

Optimize SVGs

SVG images are pretty widespread nowadays. They are scalable, look good at any size, and their file size is often tiny compared to an equivalent raster image.

However, SVGs may contain lots of junk that isn’t used for rendering but still has to be downloaded by the client. Some tools can optimize that away automatically. We use SVGOMG to optimize SVGs manually, and for projects with lots of vector graphics, we incorporate SVGO into our workflow to do this automatically.
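To illustrate what that junk looks like, here’s a deliberately naive sketch that strips a few typical leftovers from editor exports. It uses regular expressions rather than an XML parser and covers only a tiny fraction of what SVGO does, so treat it as an illustration, not a replacement for SVGO.

```typescript
// Deliberately naive illustration of SVG cleanup: regular expressions are
// not an XML parser, and SVGO/SVGOMG do far more (path rounding, merging,
// removing hidden elements). Collapsing whitespace can also alter <text>.
function stripSvgJunk(svg: string): string {
  return svg
    .replace(/<\?xml[\s\S]*?\?>/g, "") // optional XML declaration
    .replace(/<!--[\s\S]*?-->/g, "") // editor comments such as "Generator: ..."
    .replace(/<metadata[\s\S]*?<\/metadata>/g, "") // editor metadata blocks
    .replace(/>\s+</g, "><") // indentation between tags
    .trim();
}
```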

Use WebP

WebP is an image format developed by Google to reduce file size with minimal quality loss. It supports both lossy (like JPEG) and lossless (like PNG) compression, letting you save a lot of bandwidth while making your users and Google happy with faster load times. Typically, WebP pictures are 25-35% smaller than JPEGs of the same quality. It even supports animation, like GIF.

The browser support is already there: according to caniuse, it covers more than 94% of users. All major browsers except the old Internet Explorer 11 render it perfectly.

We usually use Squoosh to convert images manually or add conversion using Sharp to our workflow to do this automatically.

Favor Picture Element Over Img

Most applications and websites nowadays feature responsive designs, which usually change the layout and styles of elements on the page. However, they don’t change assets like images, so users wait for huge photos intended for desktops to load on their small mobile devices.

That’s not ideal, and one way to fix it is to use picture elements instead of img. You can set multiple source elements for different sizes, and the browser will choose the most appropriate one. It’s also possible to offer alternative image formats for when, for example, the browser doesn’t support WebP.

According to caniuse, the picture element has more than 95% support. Even when it’s not supported, the required img inside works as a fallback. By the way, the img is also the element you style to change how the image looks.
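Here’s a sketch of the markup such a picture element could contain, generated by a small TypeScript helper. The file names, the 640px breakpoint, and the helper itself are hypothetical; in a React component, you would write the same tags in JSX.

```typescript
// Sketch: build <picture> markup with WebP sources and JPEG fallbacks,
// plus smaller files below a breakpoint. Values are inserted unescaped,
// so only pass trusted, static strings.
interface ImageSet { webp: string; jpeg: string }

interface PictureOptions {
  alt: string;
  mobile: ImageSet; // used below the breakpoint
  desktop: ImageSet; // used otherwise
  breakpointPx: number;
}

function pictureHtml(o: PictureOptions): string {
  const small = `(max-width: ${o.breakpointPx}px)`;
  return [
    "<picture>",
    // The browser takes the first <source> whose media query matches and
    // whose type it supports, so WebP is listed before JPEG.
    `  <source media="${small}" srcset="${o.mobile.webp}" type="image/webp">`,
    `  <source media="${small}" srcset="${o.mobile.jpeg}" type="image/jpeg">`,
    `  <source srcset="${o.desktop.webp}" type="image/webp">`,
    // The required <img>: the fallback for everything else and the
    // element you actually style.
    `  <img src="${o.desktop.jpeg}" alt="${o.alt}">`,
    "</picture>",
  ].join("\n");
}
```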

Use Img for SVGs

Inline SVGs are great! You can animate them, style them with simple CSS, and they are lightning-fast to render on the client.

Things are a bit more complicated with server-side rendering, though. If the same icon appears several times on a page, it’s inlined every time, increasing the HTML size. The server has to render all of them, and they cost bandwidth. They also can’t be cached or reused, and they are duplicated in the JavaScript bundle for client-side rendering.

You’ll have to make them regular img tags to solve that issue. Yes, you will lose the ability to style or animate them, which might be critical in some cases. However, you can still change the color of monochrome icons using CSS filters, and there’s even a tool to help with that.

Lazy Load Images

Why load images that your users don’t see? You can save bandwidth on page load by lazy-loading your images, so they’re loaded only when they enter the viewport or come close to it.

Modern browsers support this natively: just add loading="lazy" to the img element. Browser support isn’t perfect, but browsers without it simply ignore the attribute and load the image normally. You can implement lazy loading for all browsers using IntersectionObserver, but we don’t see an absolute need to do that at this time.

You can read more about the techniques in this article.
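If you do need it, the IntersectionObserver approach can be sketched as follows. It assumes images keep the real URL in a data-src attribute (a common convention, not a standard), and it takes the observer factory as a parameter with minimal hand-written interfaces, so the logic can be exercised outside a browser; in a browser, the factory would simply construct a real IntersectionObserver with the given callback and options.

```typescript
// Minimal stand-ins for the DOM types used below (assumption: images
// carry their real URL in data-src and an empty or placeholder src).
interface LazyImage { src: string; dataset: { src?: string } }
interface LazyEntry { isIntersecting: boolean; target: LazyImage }
interface LazyObserver {
  observe(el: LazyImage): void;
  unobserve(el: LazyImage): void;
}
type ObserverFactory = (
  callback: (entries: LazyEntry[], observer: LazyObserver) => void,
  options: { rootMargin: string },
) => LazyObserver;

function lazyLoadImages(images: LazyImage[], createObserver: ObserverFactory): void {
  const observer = createObserver(
    (entries, obs) => {
      for (const entry of entries) {
        if (!entry.isIntersecting) continue;
        const img = entry.target;
        if (img.dataset.src) img.src = img.dataset.src; // triggers the download
        obs.unobserve(img); // each image only needs to load once
      }
    },
    { rootMargin: "200px" }, // start loading shortly before images scroll in
  );
  images.forEach((img) => observer.observe(img));
}
```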

Optimize Other Assets

While images are the heaviest assets in many websites and web applications, don’t forget about other resources!

CSS should be minified and clean. You can automatically remove unused CSS with tools like purifycss, but in our opinion, that only hides the problem. It’s much better to clean it up manually and keep it clean. You can’t do that with third-party frameworks, though, and purifying them is sometimes the only option.

Video files are enormous and worth optimizing, especially if you play them in the background. People expect videos to take some time to load, so it’s not a big deal when the user has play controls. For autoplay videos, consider using a nice poster that provides some information to the users while the video is still loading.

Avoid CSS-in-JS

CSS-in-JS provides a great developer experience by keeping everything in one place. However, most libraries inject all your CSS into style tags inside the page. That’s perfectly fine in the browser, but with server-side rendering, you’ll get colossal HTML documents and pay a performance cost for generating them. You’ll get only the CSS you need, but it isn’t cacheable, and the code that generates it ends up in the JavaScript bundle.

There are zero-runtime CSS-in-JS libraries, like linaria, that will allow you to write your styles in the JS files and extract them to CSS files during the build. It even supports dynamic styling based on props using CSS variables under the hood.

We still prefer CSS modules, or rather Sass modules, for most projects, as they provide scoping, more straightforward media queries, and easier collaboration with designers.

Leverage Code-Splitting

JavaScript bundle size is one of the main contributors to slow load times in rich client-side applications. By default, webpack puts everything in a single bundle, meaning the browser always downloads all the code, including rarely used routes, configuration popups, and other just-in-case features. Heavy libraries like Mapbox or Leaflet will be there too, even if they’re only used on a single “contact us” route.

Code splitting is the best way to fight this. You lazy-load parts of your code asynchronously and use them only after they’re ready. React has Suspense and React.lazy to help you with that, and react-router also supports lazy routes.

Lazy loading is quite simple in pure client-side apps, but things get tricky with server-side rendering. With renderToString, you only have one shot at rendering the page, so asynchronous code won’t work: everything that’s lazy-loaded on the client has to be ready for rendering ahead of time on the server. Before React 18, neither React.lazy nor Suspense worked with server-side rendering.

While hacks that load components dynamically with async import() on the client and with sync require() on the server might work, some libraries handle this better and more simply. The most popular ones are react-loadable and Loadable Components. Both work fine on the server and the client. Besides a simple interface, they provide APIs to fine-tune what gets rendered on the server and to preload components on the client.

Imported Component is another exciting library that promises more features than the other two, works with Suspense, and uses React.lazy under the hood. We haven’t used it in production, and it has far fewer weekly downloads than the mainstream ones, so it’s up to you to decide whether to rely on it in your application. Still, in our opinion, it’s worth playing around with.

Don’t limit yourself to route-based splitting. Think a bit outside the box when you have import() and component-level splitting. IntersectionObserver or monitoring the scroll position lets you lazy-load components below the fold, and there’s even a react-loadable-visibility library to help when you use react-loadable. You can load heavy popups, SVGs, and even modules containing complex logic with import()!
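A small helper shows the core idea behind these libraries: start loading a chunk on first use, for example on hover or when a component nears the viewport, and reuse the same promise afterwards. This is a sketch, not the API of react-loadable or Loadable Components; in a real app, loader would be a function returning import("./SomeHeavyModule"), where the module path is hypothetical.

```typescript
// Sketch: run an async loader on first use and share its promise, so
// hovering a button twice or rendering two widgets fetches the chunk once.
// In a real app, loader would return import("./SomeHeavyModule").
function lazyOnce<T>(loader: () => Promise<T>): () => Promise<T> {
  let pending: Promise<T> | undefined;
  return () => {
    if (!pending) {
      pending = loader().catch((err: unknown) => {
        pending = undefined; // forget the failure so a later call can retry
        throw err;
      });
    }
    return pending;
  };
}
```

Calling the returned function early, say from an onMouseEnter handler, acts as a preload, while the component that actually needs the module calls it again and gets the same, possibly already resolved, promise.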

Optimize NPM Dependencies

These days, 97% of the code in modern web applications comes from npm modules, which means developers write only 3% of it themselves!

All this code contributes heavily to the bundle size and is worth optimizing.

The Import Cost VS Code extension shows both plain and gzipped sizes of module dependencies right in the editor. That’s worth checking when adding a new package, but for optimization, we recommend the webpack-bundle-analyzer plugin. It generates an interactive treemap visualization of all bundles and their contents, so you can see which modules are inside and which take up the most space.
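For example, a webpack config could enable the analyzer only when an ANALYZE environment variable is set (the variable name is our own convention), so regular builds stay unaffected. This is a config fragment, not a complete configuration; it’s shown in TypeScript to match the other examples, and webpack needs ts-node or a similar loader to read a .ts config.

```typescript
// webpack.config.ts (fragment): set ANALYZE=1 when building to get a report.
import { BundleAnalyzerPlugin } from "webpack-bundle-analyzer";

const analyze = Boolean(process.env.ANALYZE); // our own variable name

export default {
  // ...your existing entry, output, module, and plugin settings
  plugins: [
    // "static" writes an HTML report (report.html by default) into the output
    // directory instead of starting a server; openAnalyzer: false skips the browser.
    ...(analyze ? [new BundleAnalyzerPlugin({ analyzerMode: "static", openAnalyzer: false })] : []),
  ],
};
```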

Some packages, like Material UI, have guidelines for optimizing bundle size. Modern bundlers support quite sophisticated tree shaking that finds unused code and removes it from the bundle automatically. While it does a pretty good job, it’s still worth reading the tips for the modules you use. For example, using named imports from the @material-ui/icons root can drastically increase build times compared to importing each icon directly from its own path.

Most modules have alternatives that do pretty much the same thing with smaller bundle sizes. Switching isn’t always worth it, especially if the library is tightly coupled with the rest of the code. For these cases, we recommend creating adapter components and functions with an interface similar to the old package’s but implemented using the new one.
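As a hypothetical example, suppose the code base calls lodash’s debounce(fn, wait) in many places. An in-house module exposing the same call shape lets you migrate call sites one by one by changing only the import. The sketch below implements just the trailing-edge behavior natively; lodash’s options (leading, maxWait) and methods like cancel are not covered.

```typescript
// Hypothetical adapter (e.g. src/utils/debounce.ts) that mimics the call
// shape of lodash's debounce(fn, wait) without the dependency.
// Only trailing-edge behavior: fn runs once, wait ms after the last call.
function debounce<A extends unknown[]>(
  fn: (...args: A) => void,
  wait: number,
): (...args: A) => void {
  let timer: ReturnType<typeof setTimeout> | undefined;
  return (...args: A) => {
    if (timer !== undefined) clearTimeout(timer); // restart the countdown
    timer = setTimeout(() => {
      timer = undefined;
      fn(...args); // called with the arguments of the most recent call
    }, wait);
  };
}
```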

It’s also worth removing duplicate dependencies that do pretty much the same thing, like mixing Ramda and Lodash. Large React applications often end up with multiple select libraries as they grow. React Select is fantastic, but it comes with an enormous bundle size for just a dropdown component, so developers start by reinventing the wheel with a custom select or by using smaller libraries. As the project evolves, it may need more complex features that aren’t easy to add, so someone includes another library that already implements them. Refactoring the old code means restyling pretty much from scratch, which is boring and takes time.

Self-Host Your Fonts

The Google Fonts API and similar services let you import fonts into a project with just one simple line of code:

```css
@import url("https://fonts.googleapis.com/css?family=font-name");
```

That’s it! Now you can use the font in your CSS, and it works perfectly.

However, this approach has some performance drawbacks. Let’s consider what happens when a browser loads your page. First, it fetches the HTML page, finds a link to the CSS file, and downloads it too. In that CSS, it finds an @import from another domain, so it has to establish a new connection, fetch the font’s CSS, and only then load the font files.

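When multiple websites use the same fonts with the same configuration, the user might already have the font in the browser cache, in which case it wouldn’t be downloaded again. However, modern browsers, including Chrome, Firefox, and Safari, now partition their HTTP cache by site, so a font cached while visiting another website is downloaded again for yours.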

When you self-host the fonts, the browser finds @font-face directly in your application’s CSS and only has to download the font files, using the same connection to your server or CDN. It’s much quicker and eliminates one fetch.

Google webfonts helper can automatically generate the CSS and let you download all the required font files. Installing fonts as npm packages is even more convenient: the Fontsource project has packages for many popular fonts, along with installation instructions.
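If you write the CSS yourself instead, it boils down to one @font-face rule per font file. The sketch below generates such rules; the family name and the /fonts/ paths are hypothetical, and it only lists WOFF2 files, which all modern browsers support. font-display: swap shows fallback text while the font loads instead of invisible text.

```typescript
// Sketch: generate self-hosted @font-face rules. Family name and URLs
// are placeholders for files you serve from your own server or CDN.
interface FontFile {
  weight: number;
  style: "normal" | "italic";
  woff2Url: string;
}

function fontFaceCss(family: string, files: FontFile[]): string {
  return files
    .map((f) =>
      [
        "@font-face {",
        `  font-family: "${family}";`,
        `  font-style: ${f.style};`,
        `  font-weight: ${f.weight};`,
        "  font-display: swap;", // render fallback text until the font arrives
        `  src: url("${f.woff2Url}") format("woff2");`,
        "}",
      ].join("\n"),
    )
    .join("\n");
}
```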

Add Link Headers

What if, for some reason, you’ve decided to stick with Google Fonts? You can speed things up by adding this to your HTML:

```html
<link rel="preconnect" href="https://fonts.gstatic.com/" crossorigin>
```

It instructs modern browsers to connect to the specified domain immediately, before they find the font’s @import, so the connection is ready for fetching assets sooner.

Of course, link hints aren’t specific to Google Fonts; they help speed up loading other third-party resources as well. They don’t make much sense for JavaScript files referenced in the document’s head, though, since the browser already discovers and loads those right away.

Fortunately, there’s another variation of the link element: the Link HTTP header. Both are semantically equivalent, but while the browser has to download and parse the HTML before it can process the element, the Link header works as soon as the response headers arrive. It’s much faster and can even be used to prefetch or preload critical resources from the same domain.

However, it requires some control over the web server. Most modern CDNs let you configure custom headers, which might be enough for most scenarios.

Keep in mind that leaving unused link hints in place is harmful, since it forces the browser to do unnecessary work.
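The header value follows the same pattern as the element (the syntax is defined in RFC 8288): a URL in angle brackets followed by parameters, with multiple links separated by commas. Here’s a sketch of a helper that builds it; the hint list and paths are hypothetical, and you would set the result as the Link header in your server or CDN configuration.

```typescript
// Sketch: build a Link header value from resource hints, e.g.
// Link: <https://fonts.gstatic.com>; rel=preconnect; crossorigin
interface LinkHint {
  href: string;
  rel: "preconnect" | "preload" | "prefetch";
  as?: string; // resource type for preload, e.g. "style" or "font"
  crossorigin?: boolean; // needed for fonts and other CORS fetches
}

function linkHeader(hints: LinkHint[]): string {
  return hints
    .map((h) => {
      const parts = [`<${h.href}>`, `rel=${h.rel}`];
      if (h.as) parts.push(`as=${h.as}`);
      if (h.crossorigin) parts.push("crossorigin");
      return parts.join("; ");
    })
    .join(", ");
}
```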

Load External Scripts Asynchronously

After successfully implementing all the tips above, you might see that the performance of your application is held back only by external scripts. Things like analytics, session recorders, and widgets have an enormous effect on load time. They load in parallel with the rest of the page, and while images improve the user experience, those scripts aren’t that important to users.

While you could remove some of them, in-depth analytics is crucial for product and marketing teams to make the right decisions. The first optimization is to make your scripts async. The browser then downloads them without blocking HTML parsing and runs each one as soon as it arrives, independently of other scripts on the page.

This partially fixes the problem, as the browser doesn’t wait for an async script to load and execute before displaying the page. However, downloading those scripts still consumes bandwidth shared with more critical resources, and parsing and executing them adds to the CPU usage spike at the start of the application.

We add those scripts to the page manually, a few seconds after our website loads. Yes, we might lose a few sessions, and that’s why we don’t do it for Google Analytics, but for the rest of the scripts, filtering out very short sessions this way is even a plus.
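A sketch of that delayed loading injects async script tags after a delay. The document is passed in through a minimal interface so the logic can run outside a browser; in a browser, you would call it from a load event listener with the global document. The three-second default and the example URLs are placeholders, not recommendations.

```typescript
// Minimal stand-ins for the DOM pieces we touch.
interface ScriptEl { src: string; async: boolean }
interface Doc {
  createElement(tag: "script"): ScriptEl;
  head: { appendChild(el: ScriptEl): unknown };
}

// Sketch: add non-critical third-party scripts delayMs after being called.
function loadScriptsLater(
  doc: Doc,
  urls: string[],
  delayMs = 3000,
  schedule: (fn: () => void, ms: number) => unknown = (fn, ms) => setTimeout(fn, ms),
): void {
  schedule(() => {
    for (const url of urls) {
      const script = doc.createElement("script");
      script.async = true; // never block parsing, even if called early
      script.src = url;
      doc.head.appendChild(script); // the download starts here
    }
  }, delayMs);
}
```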

An approach similar to lazy-loading components also works with widgets. Just load them when they’re about to enter the viewport, by monitoring the scroll position, or only once they’re already in view, using IntersectionObserver. The latter causes some layout shifts and content changes, so consider showing a loading indicator, or load the widgets anyway once a couple of seconds have passed since the page load.

Test Everything

After applying all the best practices and optimizations mentioned above, it’s time to test individual pages of the website for potential issues and address them if necessary. It might be pretty tedious to do with PageSpeed since you’ll have to check one page at a time manually. Fortunately, there’s a better tool for it.

Experte PageSpeed can test hundreds of URLs automatically. All you have to do is enter a URL, and the tool crawls the website and determines the PageSpeed scores for each subpage. The results are identical to those from Google PageSpeed Insights. It’s free and can be used without registration.

The issues found will usually be caused by something heavy on a specific page. While images, videos, and most other resources under your control can be further optimized using the techniques above, sometimes the reason is embedding heavy content from other websites. Don’t go crazy with optimizations here, as user experience is much more important than a few points on a couple of pages.

Conclusion

Optimizing an existing application is quite a challenging task.

While you can easily incorporate minor tweaks like loading third-party scripts asynchronously, self-hosting fonts, or adding cache headers, even optimizing assets and their usage might be pretty time-consuming. Hopefully, everyone serves assets from a CDN nowadays, as it’s effortless to configure for any existing project.

You’d better make critical architectural decisions, like server-side rendering, code-splitting, and CSS-in-JS, at the beginning of the project. Changing them later introduces too many code changes and regressions. Choose your npm dependencies carefully, as swapping them later won’t be that easy.

Users love the snappy experience of fast, modern applications. Google, and probably other search engines, seem to penalize slow websites these days, and the acceptable load time keeps getting shorter.

If you need help optimizing your application or building it with performance in mind from the start, contact us, and we’ll be happy to answer all your questions.